Article Goal: Explain Why Businesses and Developers Might Want to Try BDD

This article will explain the distinctions between TDD and BDD and will show how using the BDD approach, as designed, should lead to:

- Reduced costs due to higher fidelity specifications and communications

- Reduced timelines due to less requirements churn and closer business and development alignment

- Increased productivity due to the executable nature of BDD specifications

Test-Driven-Development

A working definition of Test-Driven-Development, from http://en.wikipedia.org/wiki/Test-driven_development, is:

“Test-Driven-Development (TDD) is a software development process that relies on the repetition of a very short development cycle: first the developer writes a failing automated test case that defines a desired improvement or new function, then produces code to pass that test and finally refactors the new code to acceptable standards. Kent Beck, who is credited with having developed or 'rediscovered' the technique, stated in 2003 that TDD encourages simple designs and inspires confidence.

Test-driven development is related to the test-first programming concepts of extreme programming, begun in 1999, but more recently has created more general interest in its own right.”

Scott Ambler also provides a great summary here: http://www.agiledata.org/essays/tdd.html.

Test-Driven-Development Workflow Diagram

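The mantra of Red, Green, Refactor is well-known by developers: write a failing test (red), write just enough code to make it pass (green), and then refactor the new code to acceptable standards while the tests stay green.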
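As a minimal sketch of one pass through the Red, Green, Refactor cycle in Python: the `total_price` function and both tests are hypothetical, invented here purely for illustration, and the tests use plain `assert` so no test framework is assumed.

```python
# Red: these tests are written first. Until total_price exists and
# behaves as described, calling them fails.
def test_multiplies_unit_price_by_quantity():
    assert total_price(250, 3) == 750


def test_rejects_negative_quantity():
    try:
        total_price(250, -1)
    except ValueError:
        return  # the expected outcome
    raise AssertionError("expected ValueError for a negative quantity")


# Green, then Refactor: the simplest implementation that passes the tests,
# tidied afterwards while the tests keep passing.
def total_price(unit_price_cents, quantity):
    """Return the order total in cents (hypothetical example function)."""
    if quantity < 0:
        raise ValueError("quantity must not be negative")
    return unit_price_cents * quantity
```

The tests appear above the function on purpose, mirroring the order in which they would be written. A runner such as pytest would pick up the `test_` functions by name, but they can also simply be called directly.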

Why and When To Practice Test-Driven-Development

It’s important to look at the complete historical and current context of Test-Driven-Development to understand where it is most appropriate and where it is less so.

Kent Beck, mentioned above as the most prominent formalizer of the practice, states this in his blog post “Where, Oh Where to Test?” at http://www.threeriversinstitute.org/WhereToTest.html:

“Cost, Stability, Reliability

On reflection, I realized that level of abstraction was only a coincidental factor in deciding where to test. I identified three factors which influence where to write tests, factors which sometimes line up with level of abstraction, but sometimes not. These factors are cost, stability, and reliability.

Cost is an important determiner of where to test, because tests are not essential to delivering value. The customer wants the system to run, now and after changes, and if I could achieve that without writing a single test they wouldn't mind a bit. The testing strategy that delivers the needed confidence at the least cost should win.

Cost is measured both by the developer time required to write the tests and the time to execute them (which also boils down to developer time). Effective software design can reduce the cost of writing the tests by minimizing the surface area of the system. However, setting up a test of a whole system is likely to take longer than setting up a test of a single object, both in terms of developer time and CPU time.”

More recently, he clarified the historical context of TDD with his post “To Test or Not to Test? That’s a Good Question”: http://www.threeriversinstitute.org/blog/?p=187

“Turns out the eternal verities of software development are neither eternal nor verities. I’m speaking in this case of the role of tests.

“Once upon a time tests were seen as someone else’s job (speaking from a programmer’s perspective). Along came XP and said no, tests are everybody’s job, continuously. Then a cult of dogmatism sprang up around testing–if you can conceivably write a test you must. By insisting that I always write tests I learned that I can test pretty much anything given enough time. I learned that tests can be incredibly valuable technically, psychologically, socially, and economically. However, until recently there was an underlying assumption to my strategy that I wasn’t really clear about.”

In this post, Kent goes on to state this about a product he developed called JUnit Max:

“When I started JUnit Max it slowly dawned on me that the rules had changed. The killer question was (is), “What features will attract paying customers?” By definition this is an unanswered question. If JUnit (or any other free-as-in-beer package) implements a feature, no one will pay for it in Max.

Success in JUnit Max is defined by bootstrap revenue: more paying users, more revenue per users, and/or a higher viral coefficient. Since, per definition, the means to achieve success are unknown, what maximizes the chance for success is trying lots of experiments and incorporating feedback from actual use and adoption.”

He later continues:

“When I started Max I didn’t have any automated tests for the first month. I did all of my testing manually. After I got the first few subscribers I went back and wrote tests for the existing functionality. Again, I think this sequence maximized the number of validated experiments I could perform per unit time. With little or no code, no tests let me start faster (the first test I wrote took me almost a week). Once the first bit of code was proved valuable (in the sense that a few of my friends would pay for it), tests let me experiment quickly with that code with confidence.

Whether or not to write automated tests requires balancing a range of factors. Even in Max I write a fair number of tests. If I can think of a cheap way to write a test, I develop every feature acceptance-test-first. Especially if I am not sure how to implement the feature, writing a test gives me good ideas. When working on Max, the question of whether or not to write a test boils down to whether a test helps me validate more experiments per unit time. It does, I write it. If not, damn the torpedoes. I am trying to maximize the chance that I’ll achieve wheels-up revenue for Max. The reasoning around design investment is similarly complicated, but again that’s the topic for a future post.

Some day Max will be a long game project, with a clear scope and sustainable revenue. Maintaining flexibility while simultaneously reducing costs will take over as goals. Days invested in one test will pay off. Until then, I need to remember to play the short game.”

Analysis of Kent Beck’s Cost-Benefit Based Strategy

If you read Kent’s posts closely, you will see that he weighed costs against benefits whenever he decided whether to use a TDD approach. When he developed JUnit Max, he chose to get the product features to market as soon as he could, and then, once he had an idea of what customers wanted, he stabilized many of those features with tests.

So, what motivated Kent was a practical, reasonable, and important concern: financial viability and return-on-investment.

I think it’s very important to be fully aware of these factors when deciding when and where to use TDD in any project. One of the main drivers of agile or Lean thinking is to optimize the whole, not necessarily individual parts all at once. Because of this, one must think about the larger context of a project.

Financial Investment Necessitates a Nuanced Approach to Development Practices

When thinking about the larger context, it’s easy for a developer to say something like: “We need this code to be well-factored, test-driven, and easy to maintain.” While this absolutely should be the goal of a developer, developers must also be cognizant of the larger context. Almost always, that larger context is that there is a financial investment in the project and the project’s success. And, far more often than not, there is a specific timeline involved. Thus, it cannot ever be solely the developer’s responsibility to set the acceptance criteria for a system’s completion or its acceptable level of quality. Developers and project managers must present risks and tradeoffs to business stakeholders and collaborate with them to deliver sufficient value at a level of risk the stakeholders can accept.

Finding the Correct Balance Takes Careful Thought and Collaboration

I cannot give specific recommendations in this article about any particular situation. Rather, I would recommend that all risks and tradeoffs be written down, categorized, and reviewed with key stakeholders in an open and honest fashion. If a product owner would accept a simpler, less glitzy UI in exchange for sound, well-tested, and stable core backend services, then the team must focus on delivering this. On the other hand, if a product owner says that customers will be turned off by a UI that is vapid and barren, then the team must work hard to deliver a more polished one.

Building the correct features of any system is, as Kent Beck mentions, a very experimental process when the goal is not known. Because of this, it’s important to use practices that help in driving toward the correct features in the quickest fashion. Sometimes that approach is careful, strict adherence to TDD. Sometimes it is not. It takes judgement and careful balancing of all factors between business people and technical people to make the correct decisions.

Problems That Teams Can Face When Practicing TDD

In my experience, projects can get into trouble when project leaders do not make these nuanced decisions about TDD, which require careful understanding, analysis, and collaboration amongst the entire team. In a project without a fixed schedule, it might make sense to practice a strict form of TDD. In projects that have specific dates set in stone, a team must make decisions about when and where to apply strict TDD and when and where to accept technical debt in order to meet those dates.

Delivering Business Value and Mitigating Project Risk Through Behavior-Driven-Development

As the title of this article suggests, others far more learned in these practices and problems than I am have sought remedies. Namely, Dan North and Dave Astels have written and presented widely on Behavior-Driven-Development (BDD) and its close alignment to business success criteria. The rest of this article will introduce BDD and provide links to sites, videos, presentations, and a sample project in .NET by Rajesh Pillali that provide extensive information.

Introducing Behavior-Driven-Development

As stated on http://behavior-driven.org, the definition of BDD is:

“Behaviour-Driven Development (BDD) is an evolution in the thinking behind Test-Driven-Development and Acceptance-Test-Driven-Planning.

It brings together strands from Test-Driven-Development and Domain-Driven-Design into an integrated whole, making the relationship between these two powerful approaches to software development more evident.

It aims to help focus development on the delivery of prioritised, verifiable business value by providing a common vocabulary (also referred to as a Ubiquitous Language) that spans the divide between Business and Technology.”

Dan North’s original formulation of BDD is here: http://blog.dannorth.net/introducing-bdd/. Dave Astels explains it more succinctly in his own article here: http://techblog.daveastels.com/files/BDD_Intro.pdf

Business Analysts Write Executable Specifications When Teams Practice Behavior Driven Development

Here is an example BDD feature specification from the SpecFlow project’s web site:

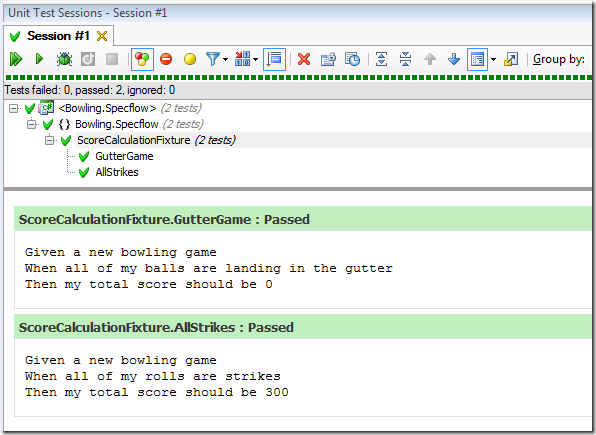

There are other “Steps” in the workflow, but here is how the ultimate fulfillment of that specification gets executed and measured:
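As a rough illustration of the general idea, and not of SpecFlow's own API, the sketch below shows how plain-language Given/When/Then steps can be bound to code and executed. Everything in it is an assumption made for illustration: the calculator scenario text, the `step` decorator, and the `run_scenario` helper are hypothetical names in a hand-rolled Python runner, and a real BDD tool supplies its own binding mechanism.

```python
import re

# Hypothetical scenario, written in the Given/When/Then style.
SCENARIO = """
Given I have entered 50 into the calculator
And I have entered 70 into the calculator
When I press add
Then the result should be 120
"""

STEPS = []  # (compiled pattern, handler) pairs, filled in by @step


def step(pattern):
    """Register the decorated function as the handler for matching steps."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


@step(r"I have entered (\d+) into the calculator")
def enter_number(context, number):
    context.setdefault("entered", []).append(int(number))


@step(r"I press add")
def press_add(context):
    context["result"] = sum(context["entered"])


@step(r"the result should be (\d+)")
def check_result(context, expected):
    # The "Then" step is where the specification is actually measured.
    assert context["result"] == int(expected), context["result"]


def run_scenario(text):
    """Execute each scenario line against the registered step handlers."""
    context = {}
    for line in text.strip().splitlines():
        # Drop the leading keyword (Given/And/When/Then); match the rest.
        _keyword, _, body = line.strip().partition(" ")
        for pattern, handler in STEPS:
            match = pattern.fullmatch(body)
            if match:
                handler(context, *match.groups())
                break
        else:
            raise LookupError(f"no step definition for: {line.strip()}")
    return context
```

Calling `run_scenario(SCENARIO)` runs the four steps in order. A scenario whose expected result does not match raises `AssertionError`, and a step with no binding raises `LookupError`, which is roughly how an executable specification reports a failed or an unimplemented requirement.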

TODO:

Complete article

Complete Examples

Rajesh Pillali provides an excellent article on CodeProject here: http://www.codeproject.com/KB/architecture/BddWithSpecFlow.aspx
